How Googlebot executing JavaScript will affect you

A few weeks ago I did a piece of work looking at the impact of Google’s robots executing JavaScript on pages, so I thought I’d do the decent thing and post it up here for you all to read as well.

I’m going to do a massive bit of oversimplification here and explain how Google works first. Anyone with any experience please don’t shout at me that this is so simple, but we need to start from the beginning:

Googlebot

Googlebot is a robot that goes to page after page on the internet. It’s job is to look at the page, find the content (text, images, video, et), catalogue it and then move on to the next page in its list.

When you come to a page, you see something like this:

Traditionally when Googlebot came to the site, it wouldn’t see this as it wouldn’t render the page. Instead it would appear as you’d imagine the page to when you view the source code:

If you view the source of a page then you’ll see something similar to this. Effectively this tells your browser what the page should look like, by giving it all the words, the links, where the images are and then pointing at any other files that are important for the browser.

The main two of these are Cascading style sheets (CSS) and JavaScript (JS):

- Cascading Style Sheets are usually one main file, but sometimes several files that tell your browser how to organise the words and images on the page: which font to use, which size font, what colour font, what colour background, etc. If I want everything that I set up as Title 3 to appear in the same way on all pages, then I can dictate this from the style sheet by including a bit in there that says everything tagged as Title 3 should appear in this way.

- JavaScript is way of making stuff happen dynamically on the page: It’s a set of commands that allow the web browser to do stuff to the page after it has been rendered. Analytics tools work via JavaScript – a bit of code tells the browser to send a ping off to a web server to say that the page has been loaded completely. They can also do all manner of other things like making things float on a page as you scroll, show up adverts in particular places on pages and all sorts of other things

Traditionally Google would know that the CSS and JavaScript existed, it might download it, but it wouldn’t execute anything in it. More on that in a minute.

Algorithm

Having collected all that information about each page, Google then does its magic ‘algorithm’.

The magical algorithm is a series of 100s of different factors that Google runs through each time you do a search to work out which pages are the most valuable to give the results. These factors include things like the words appearing on the page, the words appearing in links pointing to the page, the page loading quickly, the words appearing in the title tag of the page, etc, etc. If I knew what they all were and how each was weighted then I’d be making a fortune selling my services.

Fortunately a lot of it is known because clever people have done a load of testing to see which things make a difference. Google gets around this problem of reverse engineering pages by changing their algorithm frequently (Panda, Penguin and Hummingbird) – adding in new factors and removing old ones which no longer show that a page is valuable or just simply changing the weighting of certain factors. What hadn’t really changed before was the way that it crawled the page.

Executing JavaScript

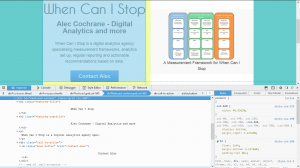

Until now that is (I say ‘now’, they officially announced it about 9 months ago). Now Google is telling you that it is running JavaScript and it is now looking at the page as you would do if you did an ‘inspect element’ command on a section of a page.

This means that it will be able to see what you do with your JavaScript and CSS. This is a clever move by Google because it wants to replicate the way that a user visits a page.

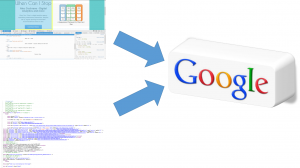

Of course it isn’t just that simple. Google isn’t just running like this, it is doing it both the new way and the old way:

This means that you can’t suddenly just implement your entire page using JavaScript (apart from the fact that there are privacy sensitive people who block JavaScript), because Google still wants to see the stuff that is in the source code. But it does mean you can start doing stuff in JavaScript that you want Google to pay attention to: you can put content in it, you can put links in it, you can put redirects in it.

Moreover the downside is stuff that you did in the past that you didn’t want the search engine to see, now it can and it will index based on that. JavaScript overlays for whatever reason (registration, email newsletters, adverts, etc) will all now be seen by the search engine.

What we don’t know is that magical algorithm and how much weight Google puts onto the stuff it finds. I suspect that this early into the process, Google doesn’t know what it is going to do with it either, so the old argument should still stand:

Create unique, interesting and relevant content, written in a way that the audience in question will search for it and it doesn’t matter how you present it to the search engine.

Leave a Reply