Putting GA4 into BigQuery

I’ve been doing a few starter posts on GA4 and I’m about to jump ahead of myself, but we’ll come back to some more GA4 posts soon. This one is going to be a post on how to set up BigQuery in GA4, which looks like it should be simple, where it used to be complicated, but there are a number of pitfalls that meant it took me longer to do than it should have done.

One thing I will note right up here is that the default connector outlined below only has data from the point you set it up onwards, it does not port across any historical GA4 data. That means the sooner you set it up the better.

Why BigQuery?

It’s a bit of a shame, but as you will have noted before, there are just large numbers of things that you could do in GA, but that you can’t do in GA4.

The number one of these for me is that segmentation in GA4 is severely limited. What do I mean by that? In the old world you could create segments in GA and then apply that to the data – you could then import those segments into data studio and use them there too. The segments could be hit based, session based or user based.

In the new GA4 world the hit based filters still exist: you can apply a filter at any point to any report base on any dimension, even if it isn’t in the report. For user based segments, you can try and replicate them by coding them into the page and that works well to a certain degree, but you hit limitations pretty quickly. For things you just want to do on the fly then you are out of luck. In the normal reporting interface anything session based is impossible.

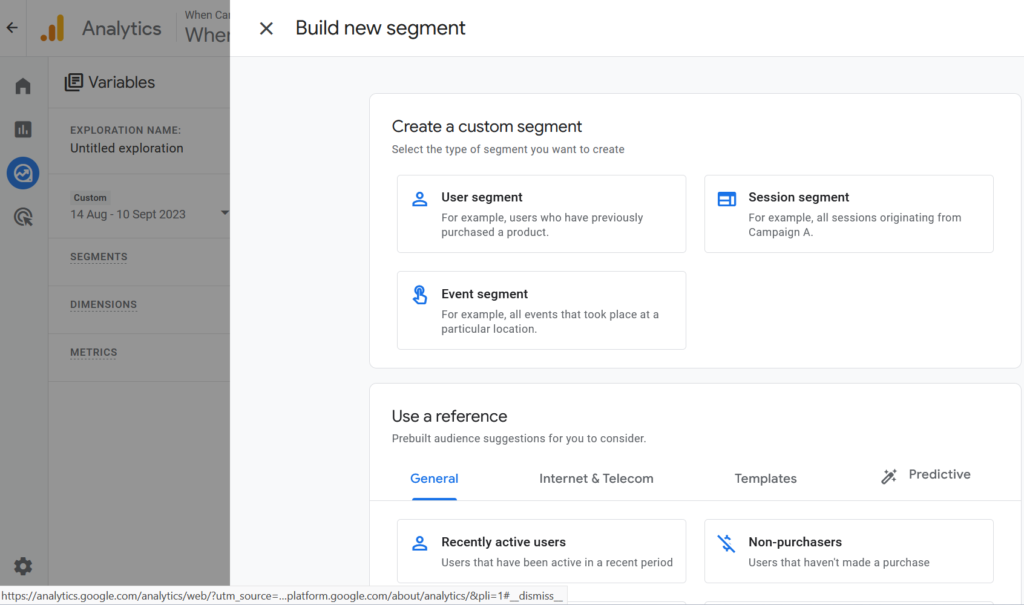

In the new explorer interface you can create segments and it works quite well:

The trouble with it is that you are limited to the reports you can get in explorer, they aren’t user friendly and they don’t export to Looker studio.

But in BigQuery you can use SQL to create these sorts of segments and then publish the results to Data Studio, which is a big plus for me.

(Of course to be ‘big’ data you should really also be thinking about how you link it to other data sets, so that is also a possibility: just make sure you have your primary key in your GA4 data – whether that is a transaction id, user id, lead id, etc.)

How much does BigQuery Cost?

There are a lot of calculators out there to work this one out, so I’m not going to pretend to replicate those or offer a calculator – you can look here or here. In my case I’ve worked out that after about 2 years it is going to cost tens of pence per month for a site with about 300k events per month.

The first thing to say is that in the old world of GA then set up was more complicated and could cost money if you had to pay for connectors. In GA4 there is an inbuilt connected which you can use in the Google Admin, avoiding the need for third party apps.

What I will say is that there is a bit of confusion over costs and I’ll try and straighten that out a bit – the costs broadly fall into two categories – one is how much data you put in there from GA4 (which you don’t have a lot of control over because you are going to put it all in) and the other is how much processing of data you do after that (which you do have control over because it is the SQL queries you write to get the results you want).

In terms of storage – you get 10GB free each month before you get charged. According to my calculation, the site I’m working with had about 18k users, generating 300k events (with some events containing custom dimensions) and that will cost me about $0.38 per month after 3 years. So far I am two months in and haven’t incurred a cost at all.

The second cost is processing and for that I haven’t incurred a cost and looking at my billing it seems unlikely I will based on what I’ve done so far (but with a caveat that I could do more). For this you could go way overboard, but at the moment, for my session based queries, running a session based segment on a full data set is about 273MB and you get 1TB free per month. After running once you can set off a job to create a new summary table that you use for your Data/Looker Studio and shouldn’t cost too much (you can then create a schedule for daily updates. I’ll do an update on this in the future, but the daily runs shouldn’t use up as much data even on aggregate (about 90MB for a whole month).

How to set up BigQuery

Right, so you have decided you want to do it and you are going to set up, how easy is it? Well in theory it should be simple – you create a Google Cloud account, put in your billing details, log in to GA4 and set the connector up.

Of course it isn’t quite that easy, but let’s do it.

1. Firstly go to Google Cloud and set up an account with billing enabled (you should be able to a ‘try it for free’ to get some free credit on a free trial). Don’t worry, you’ll keep an eye on costs so that you won’t be billed horrifically (you can set up a budget alert and cancel it if it suddenly gets really expensive.

2. When you’ve created that, go to the home dashboard, select your project from the drop down at the top and then choose ‘new project’:

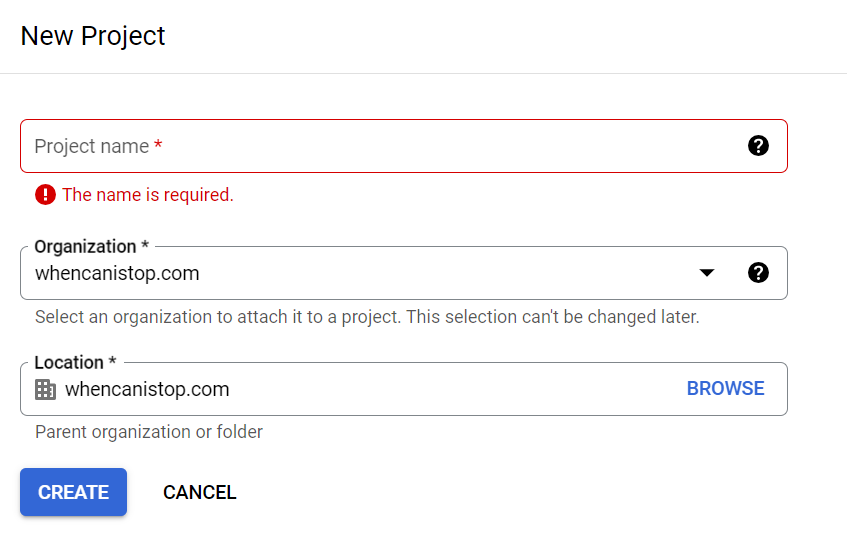

3. On the next screen give the project a sensible name and if you want to you can edit the ID so that it matches the name or is something sensible that you can remember (I have a project up there for When Can I Stop that has a rather silly name, which in hindsight I wish I’d changed):

4. The project I’ve created already has billing associated with it (that is why I had to choose an organisation in the list). If you haven’t done this before then you might need to upgrade your account to a billing one by navigating to your project’s BigQuery page. Even if you have, then you should navigate to the project’s BigQuery page. Firstly, choose your project using the drop down, secondly, search for BigQuery in the search box and click on the first result (or use this link). Here you can click on the upgrade button to make this a paid BigQuery account.

5. The project is now created, but it doesn’t have anything in it – this is Google Cloud and you need to tell it that you are going to put a BigQuery project in it, by enabling the BigQuery API. Navigate there either from the Google Cloud Home page or through this link.

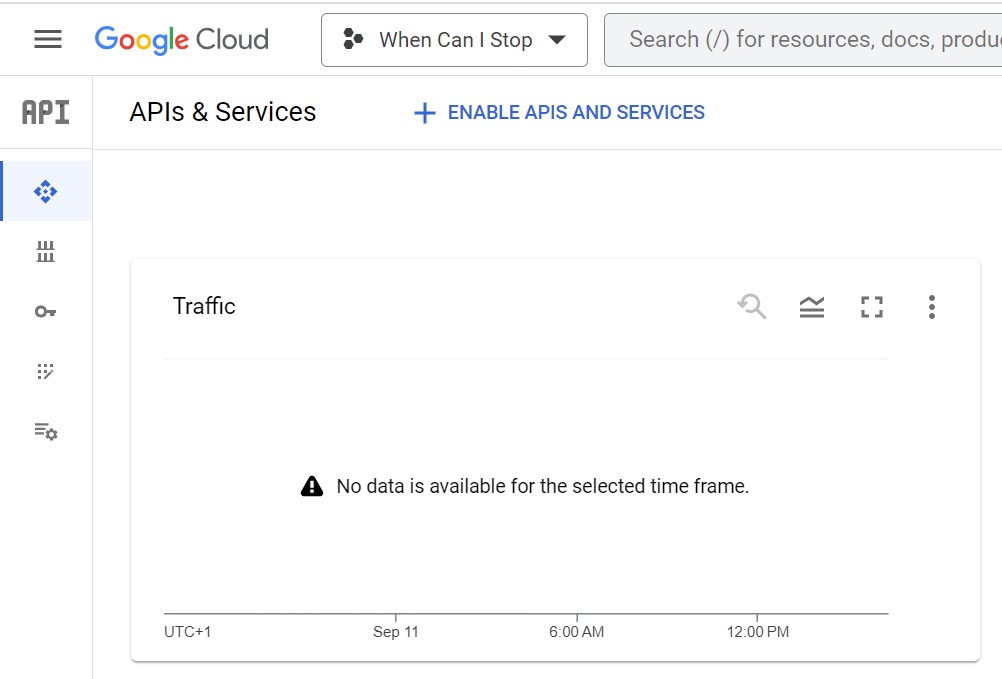

6. You should have a screen a bit like the below where you can enable APIS and services at the top (make sure the drop down has the right project in it):

Once you’ve clicked enable APIS and services, search for the BigQuery API, click through to it’s page and then click ‘manage’ (or go here).

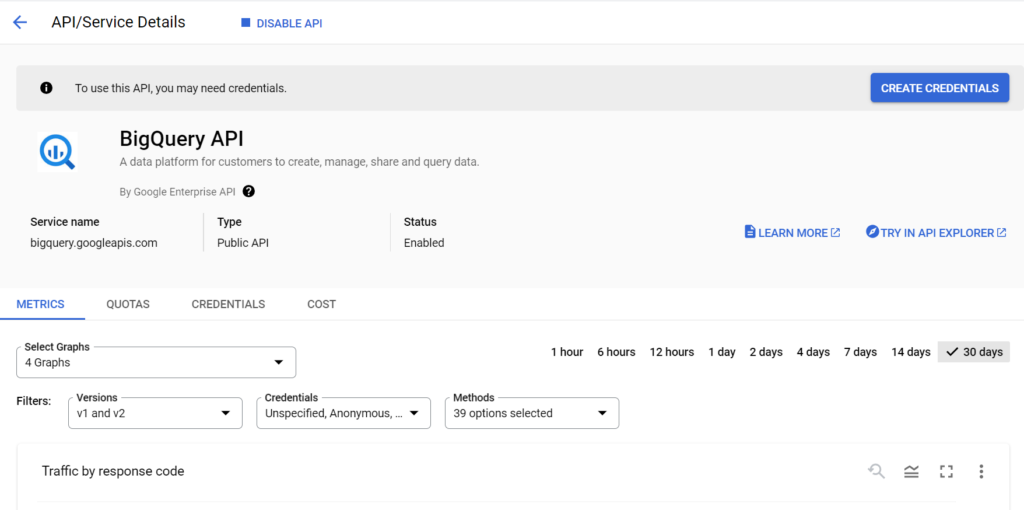

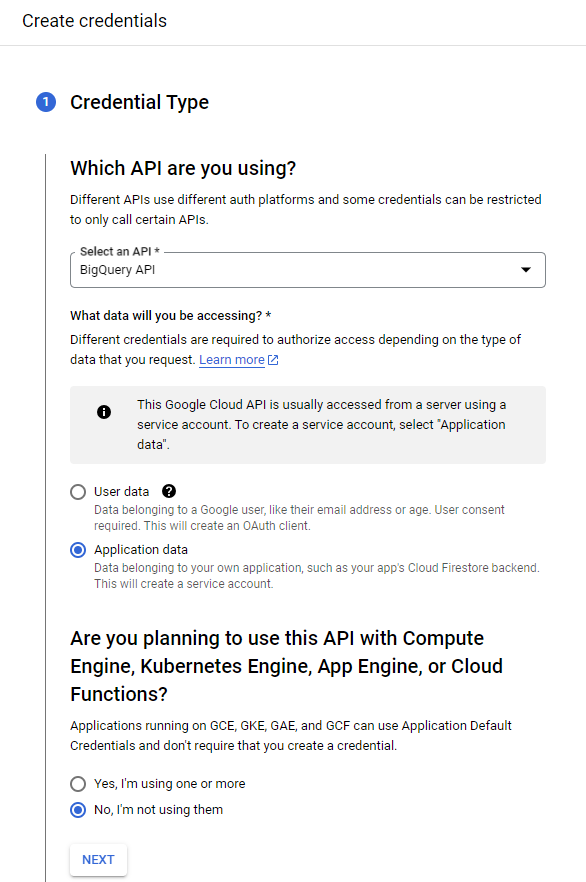

7. ‘Create Credentials’ and enter that you are using Application data not user data (hopefully there is no user data in your GA4!) and that you are not using the API engine with those third parties:

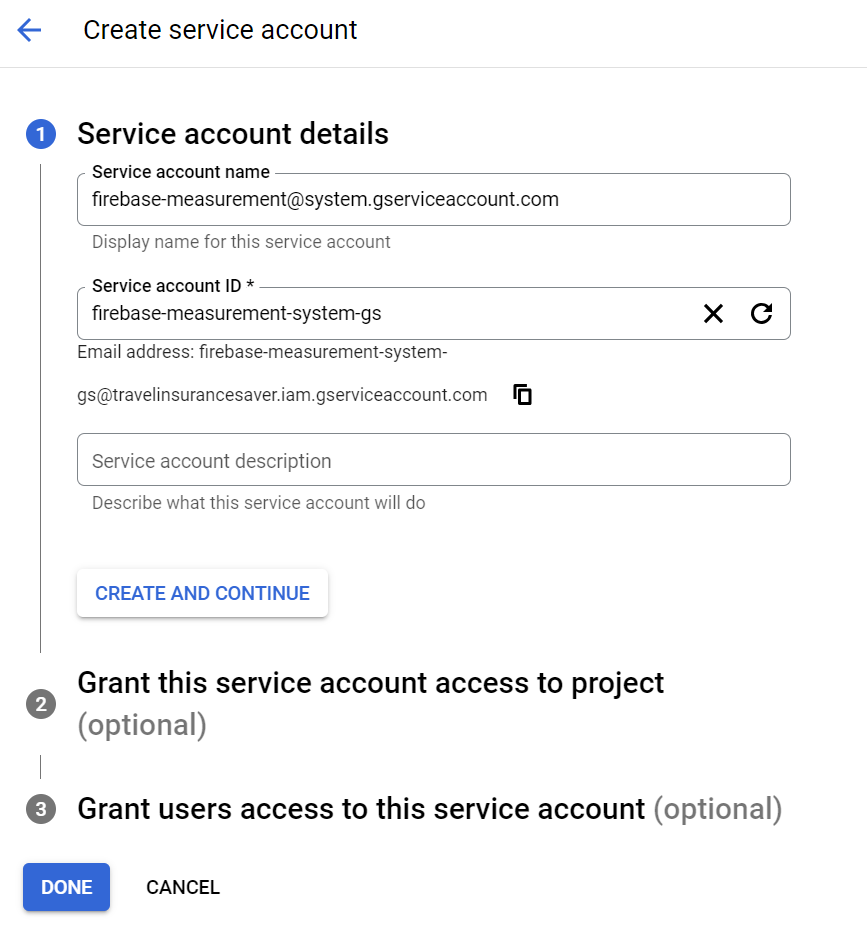

8. On the next screen choose to have the Service account name as the Firebase account (this is created as part of the process of pushing GA4 to BigQuery and will be a user in your project): firebase-measurement@system.gserviceaccount.com and that should pre-populate the Service Account ID:

9. Give the account a role (normally ‘owner’ will work, but you might want to choose others if you have done all this before) and then I leave the ‘access’ roles as blank so that they are default.

10. Having created that, your account is now ready to accept data and you can move to GA4 to finish the process. I’d navigate back here to the BigQuery page to make sure any details you need are there.

How to transfer data from GA4

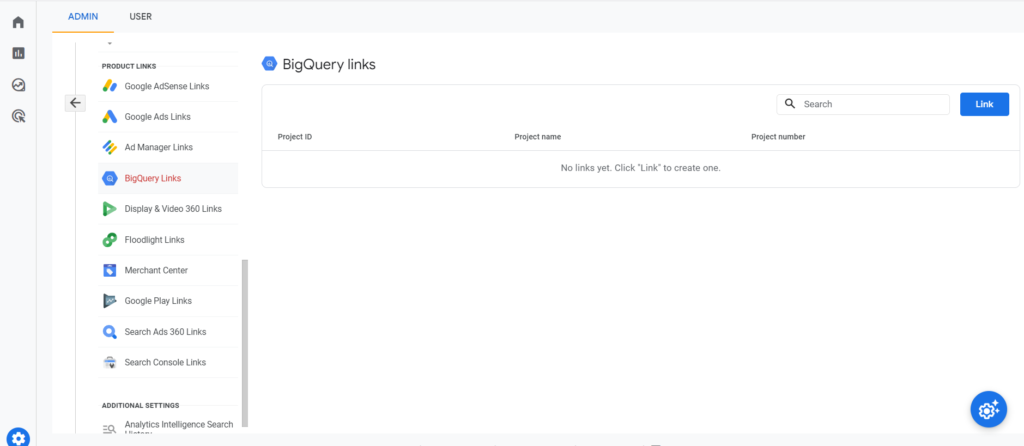

Having set up the BigQuery account, now it is time to hop into GA4 and set up the process from that side. In the admin console you should be able to find ‘BigQuery Links’ towards the bottom of the page and then you can click through to that to see the link.

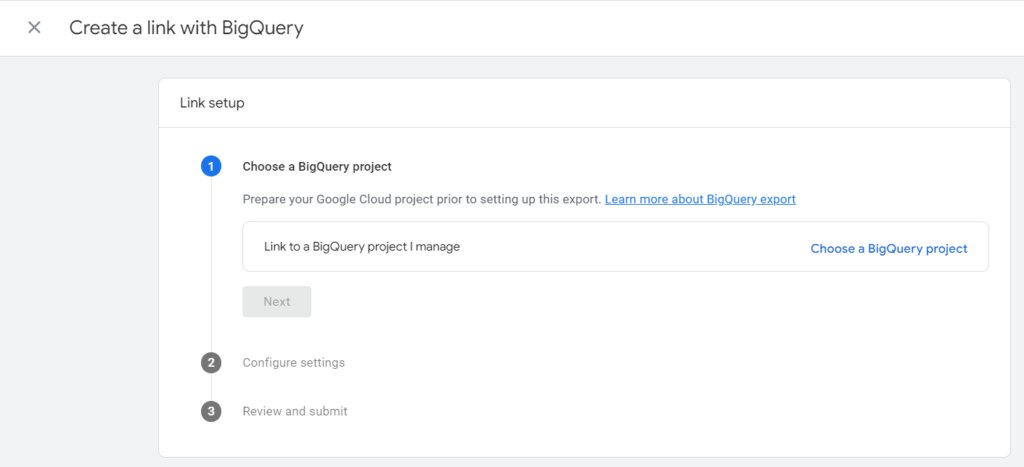

In here you can create a new ‘link’ and select your newly created BigQuery project:

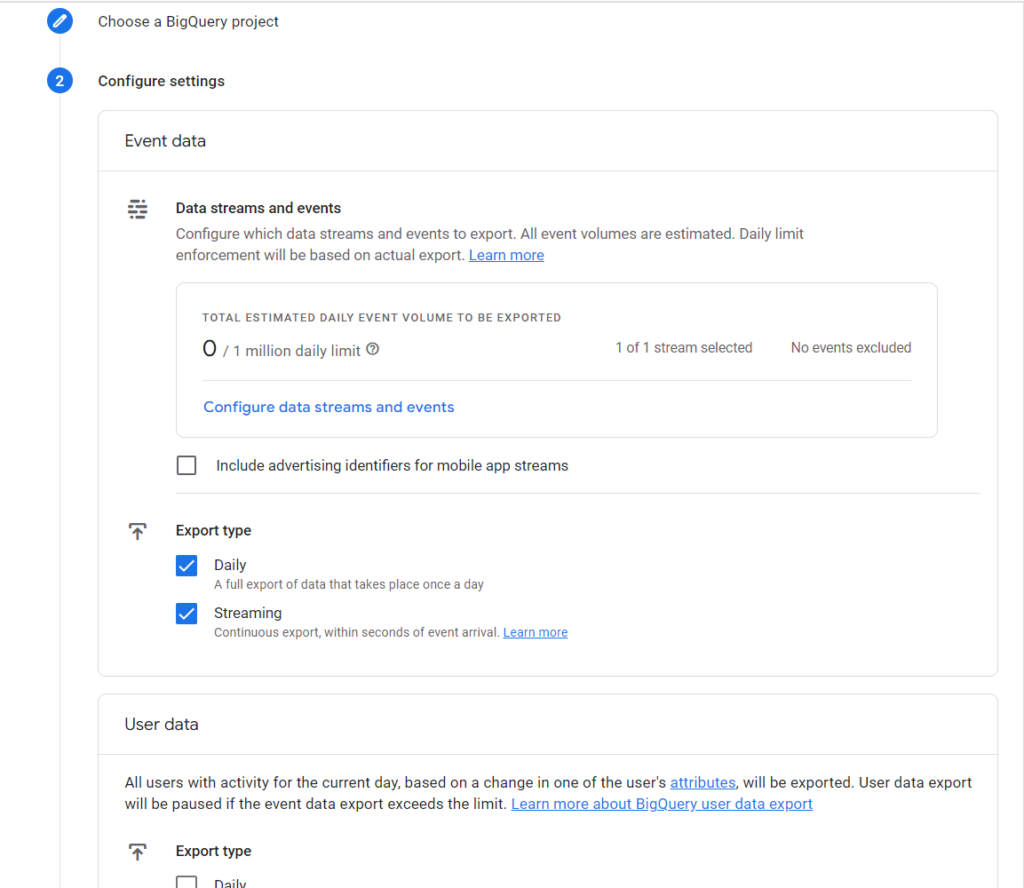

Now it gets to a slightly complicated bit. You can export daily data (which I’ve discovered quite often takes most of the next day before it arrives in the data set) and you can export ‘streaming’ data – which will give you current day’s data as it arrives before being deleted and replaced with the next day at the end of the day. I always enable both, but the streaming data tends to be ignored because dealing with two sets of data tables in a query is a pain (but you may want ‘live’ data).

I also choose not to do user attributes (but that is mostly because I’m not really using them and they feel like they would make the data set larger in an unnecessary way).

Finally click on submit and there you go – the link is created.

What data will you see in BigQuery?

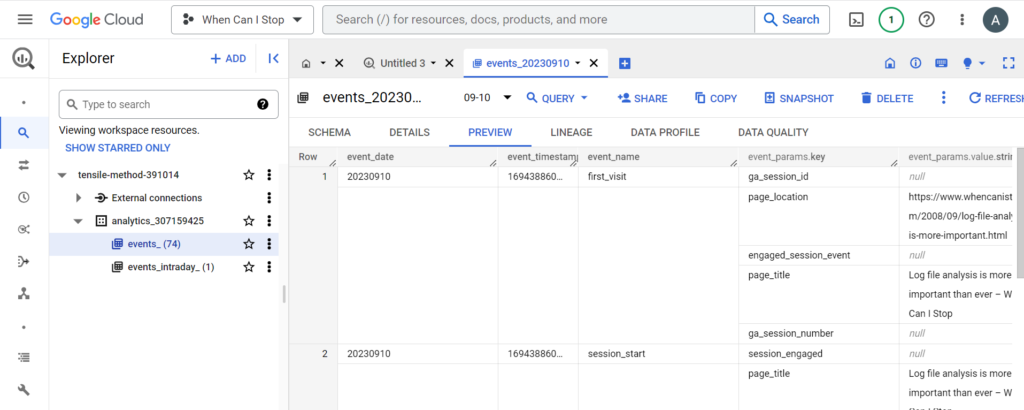

Having done all that, you probably won’t see any data in BigQuery for a day or two, then in your reports you should start to see a series of tables with events in them from the point that you set up the link (if you want historical data in there then you need to use third party apps):

The explorer on the left tells you that there are 74 (currently) day’s worth of data and here is a preview of what it looks like – a row per event with all the column headers and data in it. Each day has a table in the format: ‘events_20230910’ and there is an intraday report with today’s data in it.

This, of course, is where the fun begins when you start creating your queries!

Leave a Reply