Why you can’t compare figures from different Analytics tools

I was prompted to write this post by Hitwise (of all people), who told me they were running a beta test of a report that shows total numbers of visits. One of the questions in the test was “Did the reports show the results you expected?” The answer was, of course, no. I measure my data with an analytics tool on my own site, and it measures a completely different set of data from Hitwise. Not only are they measuring different things to start with, they measure them in a different way, process them in a different way, exclude things in a different way, extrapolate in a different way, and so on. It’s like comparing eating an apple with a fork to eating an orange with a spoon. And it doesn’t matter anyway.

Why doesn’t it matter? Well, let us go back almost a year to a post I wrote on the difference between accuracy and precision. Remember it? In it I said:

Web analytics is all about trending over time. You can find out how something is doing at the moment and you can get some insight into that – where people are coming from, where they are going to, etc. But really what you want to do is try changing something and seeing the impact that it has on your data.

and:

Well, for one, I won’t be worrying about the accuracy of my figures. Do I have 50 or do I have 32? It doesn’t matter. When I go from 50 to 51, though, I want to know that this is one additional visit (or whatever I’m measuring) in that time period.

I even gave a practical example of this when I looked at some data modelling and statistical testing I had been doing on the site I work on.

These are all well and good, but they only look at data from a single tool. There is a reason for that:

Web Analytics tools will never be comparable to each other in total numbers because none of them are accurate.

And I’m including Hitwise in my broad, sweeping statement.

Because web analytics tools are each inaccurate in their own, different ways, their figures should never, ever be compared with each other. But that still doesn’t matter, as long as each tool is precise: consistent enough that a change in its figures reflects a real change in traffic.
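To make the accuracy-versus-precision point concrete, here is a minimal sketch in Python. It assumes, as a deliberate simplification, that each tool consistently loses a fixed proportion of real visits; the traffic figures and capture rates are made up for illustration, and real tools differ in messier, non-proportional ways too. The functions `reported` and `period_change` are hypothetical helpers, not part of any analytics product.

```python
import math

def reported(true_visits, capture_rate):
    """Hypothetical model of a tool that always records the same fraction
    of real visits (the rest lost to blocked cookies, slow tags, filtering)."""
    return [v * capture_rate for v in true_visits]

def period_change(series):
    """Relative change from each period to the next."""
    return [(b - a) / a for a, b in zip(series, series[1:])]

# Made-up "true" traffic for three periods, for illustration only.
true_visits = [1000, 1100, 1210]

tool_a = reported(true_visits, 0.8)  # inaccurate: misses 20% of visits
tool_b = reported(true_visits, 0.6)  # less accurate still: misses 40%

# The totals disagree, so comparing them across tools tells you nothing...
print(sum(tool_a), sum(tool_b))

# ...but because each tool is consistent (precise), the trends agree.
trends_agree = all(
    math.isclose(a, b)
    for a, b in zip(period_change(tool_a), period_change(tool_b))
)
print(trends_agree)
```

The design choice here is to compare relative changes rather than raw counts: a consistent bias cancels out in a ratio, which is exactly why trending within one tool works while comparing totals between tools does not.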

I know the media want to take up their role of reporting which website is better than another based on ABCe audits. But it doesn’t work. Each website is measured by a different calculation, even if the sites use the same analytics tool:

- They will block different IP addresses (because everyone should block their internal IP addresses so that they can’t accidentally inflate their figures by doing silly things like unit testing against the live site).

- They have different pages. This may seem insignificant, but if one site’s pages are significantly bigger and take longer to load, then (assuming you’ve put your tags at the bottom of the page, as you should) some tags may never load, so those page views go unrecorded.

- Different audiences may be more security-conscious and more likely to block your cookies.

- Different types of website might use different types of cookie (for example, first-party or third-party), especially if they sit across multiple domains.

- The processing done by the tool in the back end is different. Processed peas go through a process, shock horror! It turns out that your analytics system takes all the data it collects and processes it into page views, visits, visitors, etc. Funnily enough, they don’t all do it in the same way (if they did, they would all be indistinguishable from each other).

- The filtering of robots and spiders is done differently by different systems. HBX, I remember being told, was quite clever and could automatically remove traffic that looked non-human from its behaviour alone (rather than from any particular technical attribute).

- I could go on with more and more differences, but I won’t.
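As a rough illustration of the first and last points above, here is a minimal Python sketch showing how the same raw traffic produces different totals once two sites apply different exclusion rules. Everything in it is hypothetical: the hits use reserved documentation IP ranges, the "Bot" user-agent check stands in for whatever robot filtering a real tool does, and it counts raw hits rather than visits, since turning hits into visits is yet another step that tools handle differently.

```python
import ipaddress

# Hypothetical raw hits: (visitor IP, user agent). Illustrative only.
HITS = [
    ("203.0.113.5", "Mozilla/5.0"),
    ("198.51.100.7", "Mozilla/5.0"),
    ("10.0.0.12", "Mozilla/5.0"),       # an internal machine
    ("192.0.2.44", "ExampleBot/1.0"),   # a self-declared robot
]

def count_hits(hits, excluded_networks, bot_markers):
    """Count hits after dropping excluded networks and any user agent
    containing a bot marker. Both rules are assumptions for illustration;
    real tools apply their own filtering rules."""
    nets = [ipaddress.ip_network(n) for n in excluded_networks]
    total = 0
    for ip, agent in hits:
        addr = ipaddress.ip_address(ip)
        if any(addr in net for net in nets):
            continue  # e.g. internal traffic blocked by IP range
        if any(marker in agent for marker in bot_markers):
            continue  # e.g. a crude robot filter
        total += 1
    return total

# Same raw traffic, two hypothetical site configurations.
site_a = count_hits(HITS, ["10.0.0.0/8"], ["Bot"])
site_b = count_hits(HITS, [], [])
print(site_a, site_b)
```

Neither configuration is "wrong"; they are simply different calculations, which is why the two totals cannot be compared.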

|

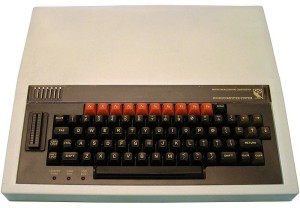

| Your Average Guardian reader is going to be accessing content in a different way … |

|

| … to your average The Sun reader (I jest, of course) |

So does your ABCe audit matter at all then?

Well, actually, it turns out it does. Firstly, you are probably quoting your figures to advertisers, press officers, the guy next to you on the tube called Bernard and all sorts of other people. What if you were making them up? Wouldn’t those people be really annoyed? Well, the ABCe do the sensible thing of making sure that you don’t make them up by double-checking them. Then they do another sensible thing by making sure you don’t inflate those figures by cheating (counting everyone twice, making all the visits yourself, etc.).

They also give the likes of you and me a sensible place to see how websites have been performing over time. Of course, this is slightly undermined by the fact that some websites change their measurement system (Protip: the ABCe include a short note at the end stating the counting method), but you can still perform some measurement over time. Whether you want to or not is a different matter.

Hitwise

Going back to Hitwise: one of its advantages is that it does allow you to compare one site with another, because every site is measured by the same tool, so you are not comparing one tool’s figures with another’s. You can make changes to your website (or watch as others make changes to theirs) and see the effect relative to each other.

What you cannot do, though, is compare the figures in Hitwise with those from another tool. This is why Hitwise doesn’t need to include total visit numbers.

Interestingly, as an aside at the end, Comscore and Nielsen, which do similar things to Hitwise (but with smaller samples in the UK), are both trying to come up with a way of integrating their panel data with real analytics data. This is (presumably) a way of getting around the issue that their data isn’t accurate. Given the size of their panels it may not be very precise either, but I still don’t think it is worth them doing it. Accuracy is the wrong measure in this industry: we need precision. Nielsen and Comscore would be far better off increasing their sample sizes to improve precision (IMO).
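The statistical reasoning behind that last point can be sketched with the textbook formula for the standard error of an estimated proportion. This assumes, as a simplification, that a panel is a simple random sample of $n$ internet users and that we are estimating the share $p$ of them who visit a given site; real panels are recruited and weighted in more complicated ways, so treat this as indicative only.

```latex
\mathrm{SE}(\hat{p}) = \sqrt{\frac{p\,(1-p)}{n}}
```

Because $n$ sits under a square root, quadrupling the panel only halves the standard error. A bigger panel makes the estimate more precise, but any systematic bias in who joins the panel (its inaccuracy) is not fixed by size alone.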
